Aside from high-quality content, making sure your website has a good structure is a key component of ranking highly on search engines. Search engines discover content not only from the sitemaps that websites submit, but also from crawl robots that work their way through entire websites. As with anything in life, though, a crawl robot has only so many resources it can devote to crawling a website. That makes it vital that your website is as crawlable as possible, so that search engine crawlers can find all of your content easily.

Why Does the Crawlability of a Website Matter?

As mentioned above, search engine crawlers go through your website in an attempt to find all of its content. A crawler lands on your homepage and follows every link on it. On each page that opens, it follows every link again, and so on.
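To make "number of clicks" concrete: the click depth of a page is the fewest links a crawler has to follow from the homepage to reach it, which is exactly what a breadth-first search over your internal links measures. Here is a minimal Python sketch, assuming a small hypothetical `site` dictionary that maps each URL to the URLs it links to. Real crawlers also use sitemaps and their own scheduling, so treat this as a model of click depth rather than of how any search engine actually works.

```python
from collections import deque


def click_depths(links, home="/"):
    """Return {url: fewest clicks from home} for every page reachable from home.

    links: hypothetical site map, {url: [urls that page links to]}.
    """
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            # Breadth-first order means the first time we see a page
            # is via the shortest chain of clicks.
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site structure for illustration only.
site = {
    "/": ["/poets", "/about"],
    "/poets": ["/sylvia-plath"],
    "/sylvia-plath": ["/sylvia-plath/daddy"],
    "/about": [],
}

print(click_depths(site))
# {'/': 0, '/poets': 1, '/about': 1, '/sylvia-plath': 2, '/sylvia-plath/daddy': 3}
```

In this made-up structure, the Daddy analysis sits three clicks from the homepage, so it is exactly the kind of page the rest of this article tries to bring closer.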

However, the more clicks the crawl robot needs to find content, the harder that content is to find. On top of this, if content is harder for search engines to find, the search engine will assume it cannot be that important and downgrade it accordingly, if it ranks it at all. For example, you will usually find the most important content one click from the homepage, which makes sense when you think about it. The more clicks away it is, the less important content seemingly becomes.

If you can keep all of your content within a few clicks of your homepage, web crawlers will be able to crawl your full website, and all of its content, much more easily. This will result in:

- Search engines perceiving the majority of your content, now at a low crawl depth, as more important, which helps search engine rankings.

- The resources the search engine spends crawling your website will be significantly reduced. This helps SEO, and frees up the crawler's resources to find harder-to-find pages that it may never have found before.

- Web users will greatly appreciate being able to find content more easily. A better user experience will result in improved SEO, and even improved ad performance too.

With this in mind, how do we go about improving the crawlability of a website to get the above benefits? Below are some of the best ways to do this.

Change your pagination to be crawl-friendly

Matthew Henry wrote a great article about how the way you paginate pages can significantly change how a crawl robot crawls your content.

If you use ‘Next page’ pagination and you have 100 pages, the crawler will have to crawl through all 100 pages before it finds the end page. If you use numbered pagination such as 1, 2, 3, **current page**, 5, 6, 7, you reduce the number of pages crawled to reach the end page.

If you use First, 1, 2, 3, **current page**, 5, 6, 7, Last, the number of pages to crawl to reach any of the 100 pages falls even further.
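You can check these numbers with a rough model. The sketch below assumes, hypothetically, that a crawler starts on listing page 1 and that each pagination style exposes exactly the links described above (a window of three numbered pages either side of the current one, optionally plus First and Last). It then counts the clicks needed to reach every page of a 100-page listing.

```python
from collections import deque


def pagination_links(page, total, window=0, first_last=False):
    """Hypothetical model of the links shown on listing page `page` (1-based).

    window=0 models a bare 'Next page' link; window=k models numbered
    links to the k pages either side of the current one.
    """
    if window == 0:
        targets = {page + 1}
    else:
        targets = set(range(page - window, page + window + 1))
    if first_last:
        targets |= {1, total}
    # Drop out-of-range pages and the link to the current page itself.
    return {t for t in targets if 1 <= t <= total and t != page}


def depths_from_first(total, **scheme):
    """Fewest clicks from listing page 1 to every other listing page."""
    depth = {1: 0}
    queue = deque([1])
    while queue:
        page = queue.popleft()
        for nxt in pagination_links(page, total, **scheme):
            if nxt not in depth:
                depth[nxt] = depth[page] + 1
                queue.append(nxt)
    return depth


schemes = [
    ("Next page only", {"window": 0}),
    ("1, 2, 3 [4] 5, 6, 7", {"window": 3}),
    ("First, 1, 2, 3 [4] 5, 6, 7, Last", {"window": 3, "first_last": True}),
]
for label, scheme in schemes:
    d = depths_from_first(100, **scheme)
    print(f"{label}: page 100 at depth {d[100]}, deepest page at depth {max(d.values())}")
```

In this model, ‘Next page’ links leave page 100 at depth 99, the numbered window brings it down to 33, and adding First and Last links puts it one click away, with no page deeper than 17.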

To me, this is a good reason not to use AJAX loaders for pagination. Although AJAX pagination loaders are great for both user experience and loading time, they have caused issues with Google in the past. The safest bet is a pagination with as many links as possible, reducing the number of clicks needed to reach any content.
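As a rough illustration of why plain links are the safer bet: crawlers discover pages by following links in the HTML, and Google's own guidance asks for `<a>` elements with an `href`. The sketch below uses Python's standard-library `html.parser` to list the internal links a crawler could follow from a snippet of HTML. The URLs and the `loadMore()` handler are hypothetical, and real crawlers that render JavaScript may see more than this simple parser does.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collect the href of every <a> tag: roughly what a crawler can follow."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links like "/page/2" against the page URL.
                self.links.append(urljoin(self.base_url, href))


def internal_links(html, base_url):
    """Followable links in `html` that stay on the same host as `base_url`."""
    parser = LinkExtractor(base_url)
    parser.feed(html)
    host = urlparse(base_url).netloc
    return [u for u in parser.links if urlparse(u).netloc == host]


base = "https://example.com/page/1"
numbered = (
    '<a href="/page/2">2</a> <a href="/page/3">3</a> '
    '<a href="/page/10">Last</a> <a href="https://twitter.com/share">Share</a>'
)
ajax = '<button onclick="loadMore()">Load more</button>'

print(internal_links(numbered, base))
# ['https://example.com/page/2', 'https://example.com/page/3', 'https://example.com/page/10']
print(internal_links(ajax, base))
# []
```

The numbered block hands the crawler three new URLs; the JavaScript-only button hands it nothing to follow.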

Add internal links throughout content

If you are unable to change the pagination, or you find a lot of your content still takes a high number of clicks to reach (typically 3 or more), you will need to look to other methods to reduce the crawl depth of your website. One such method is internal linking.

As mentioned already, a crawl robot will follow every link on a page to find as many pages as possible. If you have internal links in your content, you increase the likelihood of the crawl robot finding all of your pages.

For this reason, it is a great idea to avoid orphaned content at all costs. Orphaned content is content that no other page on your website links to: it is on its own.
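Because a crawler can only reach pages through links, you cannot find orphans by crawling from the homepage alone; you need a full list of your URLs from somewhere else, such as your sitemap or CMS. A minimal sketch, assuming two hypothetical inputs: `all_pages` (that full list) and `links` (each URL mapped to the URLs it links to):

```python
def find_orphans(all_pages, links, home="/"):
    """Pages that no *other* page links to (the homepage is excluded)."""
    linked_to = set()
    for source, targets in links.items():
        for target in targets:
            # A page linking to itself does not rescue it from being orphaned.
            if target != source:
                linked_to.add(target)
    return sorted(p for p in all_pages if p != home and p not in linked_to)


# Hypothetical sitemap and link data for illustration only.
all_pages = ["/", "/poets", "/sylvia-plath/daddy", "/rudyard-kipling/if"]
links = {
    "/": ["/poets"],
    "/poets": ["/sylvia-plath/daddy"],
    "/rudyard-kipling/if": ["/rudyard-kipling/if", "/poets"],
}

print(find_orphans(all_pages, links))
# ['/rudyard-kipling/if']
```

Here the If page links out to other pages, but nothing links in to it, so a crawler starting from the homepage would never find it.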

Use categories on posts

Categories are a great way to increase the crawlability of a website. They group many articles on a single page that is one click away, so crawl robots don't have to stumble across the content at random.

Not only are categories good for crawl robots, they can also improve SEO and user experience. For example, one of my website's posts, an analysis of Sylvia Plath's poem Daddy, has the following search engine result when web users search ‘daddy poem analysis’:

On a separate note, the links at the bottom of that result actually point to H2 headings. For example, the ‘Themes in Daddy’ heading appears as the following link:

https://poemanalysis.com/sylvia-plath/daddy#Themes_inDaddy

This highlights the importance of using good headings in your content, since they can bring the added bonus of extra links on search engine results pages.

Use tags on posts

Categories should be used at a high level. Alongside well-optimised categories, it is a great idea to add tags to increase the crawlability of your website, because tags give you another way to group articles together, making them easier to find for both web users and crawl robots.

For example, take a post on If by Rudyard Kipling. The category is ‘Rudyard Kipling’, letting web users explore any of his poems from the category page. For the tags, we could use the following:

- Inspirational poem

- 19th century poem

- 1800s poem

- 1890s poem

- English poets

- Father-son poems

For each of these, there will be other poems that share the same tag. This means crawl robots will find the articles grouped not only by category but also by more specific tags. All in all, this will improve the crawlability of your website, whilst also giving users a better experience.

To achieve this, it is important to make sure the tags are easily accessible. Some websites put tags on post pages, either above or below the content. Whilst this is a good idea, it is even better to have some of your more important tags appear on your homepage, to reduce the number of clicks needed to reach articles even further.
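To see how much tag pages linked from the homepage can help, here is a toy comparison in Python. It assumes a hypothetical blog of 40 posts, listed ten per page in an archive that only has ‘Next page’ links, and then adds two hypothetical tag pages, linked from the homepage, that between them cover every post. Click depth is measured with the same breadth-first approach a crawler's "number of clicks" implies.

```python
from collections import deque


def click_depths(links, home="/"):
    """Fewest clicks from `home` to every reachable page (breadth-first search)."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def archive_site(n_posts=40, per_page=10):
    """Hypothetical blog: homepage -> archive pages ('Next page' only) -> posts."""
    links = {"/": ["/archive/1"]}
    n_pages = -(-n_posts // per_page)  # ceiling division
    for k in range(1, n_pages + 1):
        first, last = (k - 1) * per_page + 1, min(k * per_page, n_posts)
        posts = [f"/post/{i}" for i in range(first, last + 1)]
        nxt = [f"/archive/{k + 1}"] if k < n_pages else []
        links[f"/archive/{k}"] = posts + nxt
    return links


def add_tag_hubs(links, tags):
    """Link each hypothetical tag page from the homepage, and its posts from it."""
    links = {page: list(targets) for page, targets in links.items()}  # copy
    for tag, posts in tags.items():
        hub = f"/tag/{tag}"
        links["/"].append(hub)
        links[hub] = list(posts)
    return links


def deepest_post(depths):
    return max(d for page, d in depths.items() if page.startswith("/post/"))


tags = {
    "inspirational-poems": [f"/post/{i}" for i in range(1, 21)],
    "19th-century-poems": [f"/post/{i}" for i in range(21, 41)],
}
before = click_depths(archive_site())
after = click_depths(add_tag_hubs(archive_site(), tags))
print("deepest post before:", deepest_post(before))  # 5
print("deepest post after:", deepest_post(after))  # 2
```

In this toy site, the deepest post drops from 5 clicks to 2, while the archive pages themselves stay exactly where they were.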

Ultimately, there are many things you can do to improve crawlability, and this article has highlighted 4 effective ways of doing so. If you are unsure how easy your website is to crawl, or what its crawl depth is, I would recommend getting a site audit from the likes of SEMRush, or using crawlers such as Screaming Frog or DeepCrawl.

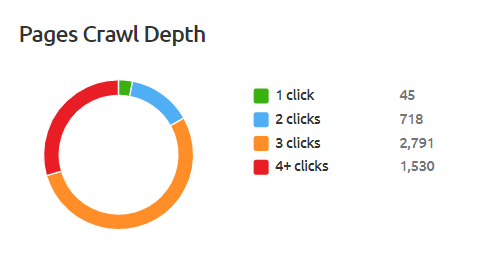

For example, I used SEMRush to analyse the crawlability of my website and got the following results:

This makes it clear that around 4/5 of all pages are 3 or more clicks deep, whilst a third are over 4 clicks deep. When crawl depth looks like this, you know there is definite room for improvement by implementing some of the techniques above!
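If you export page depths from a crawler, or compute them yourself, the two figures I quoted above (the share of pages at 3 or more clicks, and the share over 4 clicks) are simple to reproduce. A minimal sketch, using made-up depths rather than my actual SEMRush data; the page names are hypothetical:

```python
from collections import Counter


def depth_histogram(depths):
    """Number of pages at each click depth, sorted by depth."""
    return dict(sorted(Counter(depths.values()).items()))


def share_at_least(depths, min_clicks):
    """Fraction of pages that need at least `min_clicks` clicks from the homepage."""
    deep = sum(1 for d in depths.values() if d >= min_clicks)
    return deep / len(depths)


# Hypothetical crawl output: {url: clicks from homepage}.
depths = {"/": 0, "/a": 1, "/b": 2, "/c": 3, "/d": 3, "/e": 4, "/f": 5, "/g": 5}

print(depth_histogram(depths))    # {0: 1, 1: 1, 2: 1, 3: 2, 4: 1, 5: 2}
print(share_at_least(depths, 3))  # 0.625, i.e. 3+ clicks
print(share_at_least(depths, 5))  # 0.25, i.e. over 4 clicks
```

Re-running the same report after adding pagination links, internal links, categories or tags gives a simple before-and-after check on whether the changes actually reduced crawl depth.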